By Alan Ifould

Data collection and analysis (more specifically, turning data into useful information and actionable insight) is the necessary foundation for any progress toward the benefits promised by Smart Manufacturing and Industrie 4.0. We are only just entering the Fourth Industrial Revolution, in which automated manufacturing tools and processes will self-optimize based on insights derived from artificial intelligence. In fact, the Industry 4.0 Smart Manufacturing Adoption Report 2020* found that although North America emerged as the global leader, only 36% of organizations have rolled out Industry 4.0 technologies. Among the barriers to be overcome are old paradigms and habits that needlessly constrain the flow of data. This is not to suggest that data should be openly available to all.

In a complex technical enterprise like semiconductor manufacturing, suppliers’ ability to differentiate themselves from their competitors through superior knowledge of processes and applications can be a critical competitive advantage. More importantly, that same domain knowledge has great value for customers, who rely on their suppliers’ expertise to solve problems. The dilemma arises when the customer claims ownership of the supplier’s knowledge: “If you learned it here, I own it.” There are certainly times when such a claim is justified, as when the knowledge results from a collaboration, especially one that involves significant investment by the customer. Other cases are less clear.

A strong argument can be made that overzealous assertion of proprietary rights stifles innovation and that the industry is better served by turning hard-won domain knowledge into better products and improved support.

Imagine this case: when a system is down, one of the first comments a service engineer hears is something like, “Surely this can’t be the first time you’ve seen this. You must have seen it somewhere before.” If it is your system that is down, you just want it fixed. You expect the service engineer to have access to the domain knowledge needed to solve the problem and get your operations back up. The situation would be very different if the engineer were prevented from using knowledge learned at another operation.

We have our share of habitual biases, among them a pervasive resistance to sharing data, even in situations where the potential benefits to both individual companies and the industry as a whole are quite apparent. It is time to reconsider these biases thoughtfully, carefully weighing the potential risks and benefits.

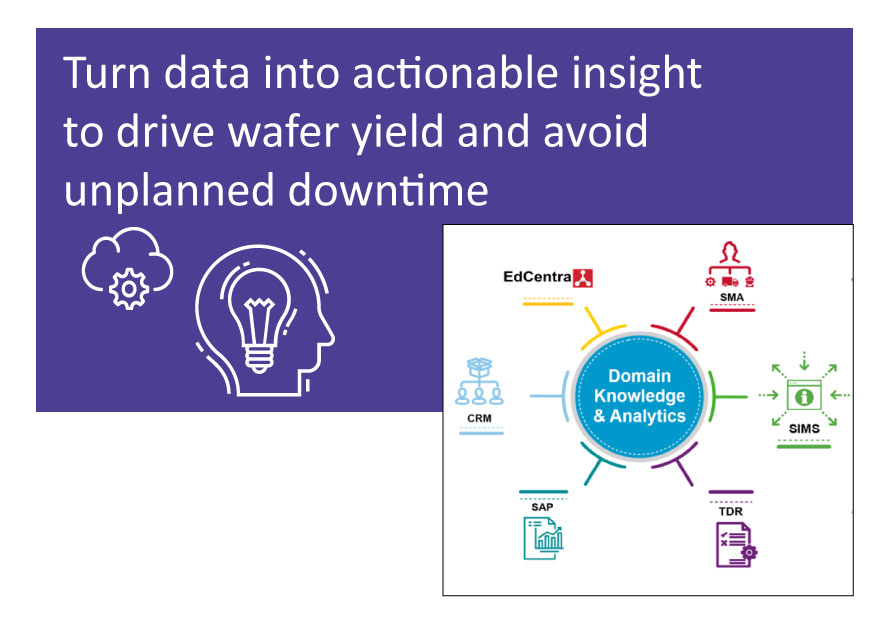

By way of example, we describe our own experience at Edwards in transitioning from a relatively primitive (sometimes literally pencil and paper) system for tracking service and support activities to a cloud-based service management application (SMA) that captures detailed data from support activities and makes it readily available for analysis across an installed base of thousands of systems.

Why move the Service Management Application to the cloud?

Initially, although a few locations still relied on transcribing paper records, the more typical situation was an on-premises application physically limited to a single site.

- The on-premises application enabled local operations managers to understand site performance, but gave them no view of how performance differed across sites.

- Data tended to be extracted from the on-premises application and shared only when there was a problem, a reactive approach.

- When needed, the data was typically extracted by customers and shared via e-mail or a file-sharing application.

- System upgrades and improvements were typically slow to roll out.

It was clear that moving the system to the cloud would simplify the management of user access, speed up the deployment of system upgrades and improvements, and enable data analytics across sites. However, it was critical to ensure the security of any data that either our customers or Edwards deemed sensitive or confidential.

What benefits has the new platform brought?

We are now nearly a year beyond the initial roll-out of the cloud-based app, and benefits have already accrued to our customers:

- Local operations managers can still understand operational performance at their own site, with the added benefit of daily, centrally generated reports and dashboard updates that eliminate the need to build their own reports from the data.

- Global visibility enables us to see differences in the time and effort required to complete maintenance activities, to find common failure modes, and to determine when specific systems or applications require a higher proportion of corrective actions than others in similar uses.

- This lets us identify operational differences and determine how to provide the most efficient and effective procedures and training; it also lets our technical teams identify and prioritize the areas of product performance that require review.
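The article does not say how these comparisons are computed. As an illustration only, here is a minimal Python sketch of one way to flag groups (for example, system types or applications) whose share of corrective actions is unusually high relative to the whole fleet. The `GroupCounts` record, the group names, the counts, and the one-sided z-test against the pooled fleet rate are all hypothetical assumptions, not Edwards’ method.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class GroupCounts:
    """Hypothetical per-group tally of maintenance actions."""
    name: str        # e.g. a system type or application (illustrative)
    corrective: int  # corrective (unplanned) actions
    total: int       # all maintenance actions, planned + corrective


def flag_high_corrective(groups: list[GroupCounts], z_threshold: float = 2.0) -> list[str]:
    """Return the names of groups whose corrective-action proportion is
    significantly above the pooled fleet-wide proportion (one-sided z-test).
    Simplification: the fleet rate includes the group being tested."""
    fleet_total = sum(g.total for g in groups)
    p_fleet = sum(g.corrective for g in groups) / fleet_total

    flagged = []
    for g in groups:
        if g.total == 0:
            continue
        p_group = g.corrective / g.total
        # Standard error of a proportion for a group of this size at the fleet rate
        se = sqrt(p_fleet * (1 - p_fleet) / g.total)
        if se > 0 and (p_group - p_fleet) / se > z_threshold:
            flagged.append(g.name)
    return flagged


# Illustrative data only: four hypothetical applications of 100 actions each
fleet = [
    GroupCounts("application-A", corrective=30, total=100),
    GroupCounts("application-B", corrective=10, total=100),
    GroupCounts("application-C", corrective=12, total=100),
    GroupCounts("application-D", corrective=8, total=100),
]
print(flag_high_corrective(fleet))  # ['application-A']
```

Here the fleet rate is 60/400 = 0.15, and application-A’s 0.30 sits about 4.2 standard errors above it. The same 30% rate observed over only 10 actions would fall well short of the threshold, which is why a view across the whole installed base makes such differences far easier to detect.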

All of this lays the foundation for an operational excellence approach, in which performance, reliability and maintenance effort are optimized to achieve the best outcome and release more value across the fab.

Overcoming customer concerns

Many customers were initially resistant to our request to put this data into an off-premises, cloud-based application. In most cases (though not all), their reluctance disappeared after a thoughtful discussion of exactly what data we were interested in and how it would be used and protected. We normalize process names and definitions so that like processes and products can be compared, and we anonymise the source. Access to the database and the analytics is restricted to authorised users, and all access requests are tracked and managed. Local site personnel cannot access data from other sites. Arguably, this cloud-based data exchange is far more secure than the e-mail transfers that were common before, which were often made under pressure in reaction to a down event.
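The article does not describe how normalization and anonymisation are implemented. The following minimal Python sketch shows one common pattern, assuming a hypothetical record layout (`site_id`, `process`, `action`, `duration_h`) and a hypothetical alias table. It swaps in a named technique, keyed-hash pseudonymization with HMAC-SHA256: the same site always maps to the same token, so trends can still be tracked, but the token cannot be linked back to the site without the secret key.

```python
import hashlib
import hmac
import re

# Hypothetical mapping from site-specific process labels to shared names
PROCESS_ALIASES = {
    "w cvd": "tungsten-cvd",
    "tungsten cvd": "tungsten-cvd",
    "poly etch": "poly-etch",
    "polysilicon etch": "poly-etch",
}


def normalize_process(label: str) -> str:
    """Map a free-text process label onto a shared name so that like
    processes can be compared across sites; unknown labels are marked
    'unmapped' rather than guessed."""
    key = re.sub(r"[\s_\-]+", " ", label.strip().lower())
    return PROCESS_ALIASES.get(key, "unmapped")


def pseudonymize_site(site_id: str, secret_key: bytes) -> str:
    """Replace a site identifier with a truncated HMAC-SHA256 token."""
    digest = hmac.new(secret_key, site_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def anonymize_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy of a service record with the site pseudonymized, the
    process normalized, and any field not listed here (e.g. names) dropped."""
    return {
        "site": pseudonymize_site(record["site_id"], secret_key),
        "process": normalize_process(record["process"]),
        "action": record["action"],
        "duration_h": record["duration_h"],
    }


# Illustrative usage with made-up values
key = b"example-secret-held-by-the-platform"
raw = {"site_id": "FAB-7", "process": "W_CVD", "action": "pump replacement",
       "duration_h": 3.5, "technician": "J. Smith"}
clean = anonymize_record(raw, key)
print(clean["process"])  # tungsten-cvd
```

Using an allow-list of output fields, rather than deleting known-sensitive ones, means a new field added to the source record is excluded by default.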

Towards a more rational approach

In the SMA case the benefits are clear. For example, a local anomaly is much easier to spot against the entire installed base than within a view restricted to a handful of local systems, because the larger sample provides a far more reliable baseline. What is less clear is whether this case alone justifies a more general sharing of information. After all, the data we wanted to share was data we already had. Perhaps more importantly, it related to the performance of Edwards’ own critical systems and personnel. Customers will justifiably be more concerned about losing control of data that relates directly to the performance of their own processes. Even in these cases, we believe that when a clear benefit to the customer exists, most of these concerns can be addressed through carefully considered contractual agreements and technical security measures.

As a supplier for whom developing superior domain expertise, and delivering its benefits, is a core value, we at Edwards advocate strongly for a more considered and thoughtful approach to data sharing. For customers to benefit from their suppliers’ expertise, they must be willing to enable its development. A rising tide lifts all boats.

We explore some practical considerations for data sharing, along with a customer use case, in our 10-minute on-demand webcast, “Data – Who cares? Turning data into actionable insight”.

Acknowledgements – I want to thank my colleagues Phil Ware and Neil Conden for their valuable insights and contributions to this discussion.

*Industry 4.0 Smart Manufacturing Adoption Report 2020 (iot-analytics.com)