MANOUCHEHR RAFIE, Ph.D., Gyrfalcon Technology Inc. (GTI), Milpitas, CA

Today’s societies are becoming ever more multimedia-centric, data-dependent and automated. Autonomous systems are hitting our roads, oceans and air space. Automation, analysis and intelligence are moving beyond humans to “machine-specific” applications. Computer vision and video for machines will play a significant role in our future digital world. Millions of smart sensors using artificial intelligence will be embedded into cars, smart cities, smart homes and warehouses. In addition, 5G technology will provide the data highways of a fully connected intelligent world, promising to connect everything from people to machines and even robotic agents. The demands will be daunting.

The automotive industry has been a major economic sector for over a century, and it is heading towards autonomous and connected vehicles. Vehicles are becoming ever more intelligent and less reliant on human operation. Vehicle-to-vehicle (V2V) and vehicle-to-everything (V2X) communication, where information from sensors and other sources travels via high-bandwidth, low-latency and high-reliability links, is paving the way to fully autonomous driving. The most compelling factor behind autonomous driving is the reduction of fatalities and accidents. Given that more than 90% of all car accidents are caused by human error, self-driving cars will play a crucial role in accomplishing the automotive industry’s ambitious vision of “zero accidents,” “zero emissions” and “zero congestion.”

The only obstacle is that vehicles must possess the ability to see, think, learn and navigate a broad range of driving scenarios.

The market for automotive AI hardware, software, and services will reach $26.5 billion by 2025, up from $1.2 billion in 2017, according to a recent forecast from Tractica (Figure 1). This includes machine learning, deep learning, natural language processing (NLP), computer vision, machine reasoning, and strong AI. According to a McKinsey report, fully autonomous cars could represent up to 15% of passenger vehicles sold worldwide by 2030, with that number rising to 80% by 2040, depending on factors such as regulatory challenges, consumer acceptance, and safety records. Autonomous driving is currently a relatively nascent market, and many of its benefits will not be fully realized until the market expands.

AI-defined vehicles

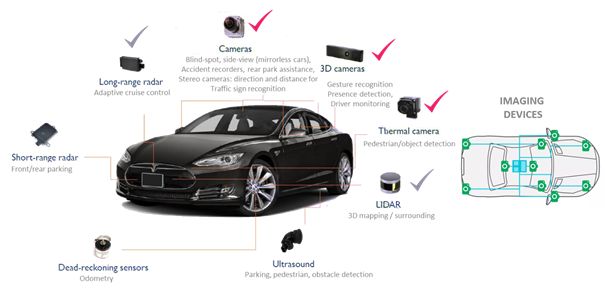

The fully autonomous driving experience is enabled by a complex network of sensors and cameras that recreate the external environment for the machine. Autonomous vehicles process the information collected by cameras, LiDAR, radar and ultrasonic sensors to determine the car’s distance to surrounding objects, curbs and lane markings, and to interpret visual information such as traffic signals and pedestrians.

Meanwhile, we are witnessing the growing intelligence of vehicles and mobile edge computing, driven by recent advancements in embedded systems, navigation, sensors, visual data and big data analytics. This trend started with Advanced Driver Assistance Systems (ADAS), including emergency braking, backup cameras, adaptive cruise control and self-parking systems.

Editor’s Note: The full article originally appeared in the January/February issue of Semiconductor Digest.