By Kaustubh Gandhi, Senior Product Manager, Bosch Sensortec

Artificial intelligence (AI) is making headlines everywhere, offering a range of capabilities, including location and motion awareness — determining whether a user is sitting, walking, running or sleeping. Behind the scenes, AI is capturing volumes of data. Makers of smartphones and fitness and sports trackers, along with application developers, are all clamoring for this data because it helps them analyze real-world user behavior in depth. Manufacturers gain a competitive edge by tapping this intelligence: Using it to improve user engagement, they increase the perceived value of their devices, potentially reducing customer churn.

How can consumer-product manufacturers tap the built-in capabilities of MEMS inertial sensors — which are already ubiquitous in end-user devices — to make the most of AI?

Machine learning

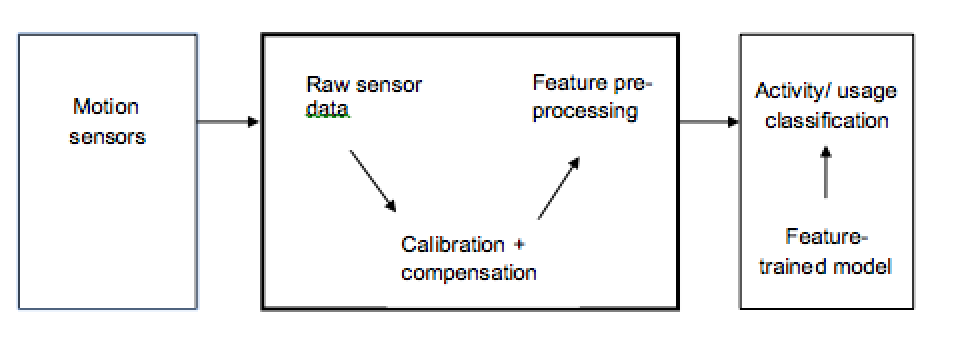

Product manufacturers can easily build an activity classification engine using commonly available smart sensors and open-source software. Activity trackers, for example, use raw data first collected via the MEMS inertial sensors that are already installed in smartphones, wearables and other consumer products.

With the building blocks in place, consumer-product manufacturers can apply machine learning techniques to classify and analyze this data. There are several possible approaches, ranging from logistic regression to deep learning neural networks.

One well-documented method for classifying sequences in AI is the support vector machine (SVM). Physical activities, whether walking or playing sports, consist of specific, repetitive sequences of movements that MEMS sensors capture as data. This collected data can be easily processed into well-structured models that are classifiable with SVMs.

Consumer-product manufacturers have gravitated toward the SVM model because it is easy to use, easy to scale and predictable in its behavior. Using an SVM to set up multiple simultaneous experiments for optimizing classification over diverse, complex real-life datasets is far simpler than with other approaches. An SVM also introduces a wide range of size and performance optimization opportunities for the underlying classifier.
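As a rough illustration of the idea, the sketch below trains a linear SVM on two simple features extracted from windows of accelerometer data. Everything in it is an assumption made for illustration: the synthetic "still" and "walking" signals, the two features, the 2-second window, and the plain sub-gradient training loop, which stands in for a production SVM library.

```python
import math
import random

def window_features(samples):
    """Two illustrative features: standard deviation and mean absolute step."""
    n = len(samples)
    mean = sum(samples) / n
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / n)
    mean_step = sum(abs(b - a) for a, b in zip(samples, samples[1:])) / (n - 1)
    return [std, mean_step]

def synthetic_window(walking, rng, rate_hz=50, seconds=2):
    """Hypothetical acceleration magnitude in g: noise around 1 g, plus a 2 Hz swing when walking."""
    amp = 0.4 if walking else 0.0
    return [1.0 + amp * math.sin(2 * math.pi * 2.0 * i / rate_hz) + rng.gauss(0.0, 0.02)
            for i in range(rate_hz * seconds)]

def make_dataset(n_per_class, seed):
    """Balanced set of feature vectors with labels +1 (walking) and -1 (still)."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n_per_class):
        for walking in (False, True):
            X.append(window_features(synthetic_window(walking, rng)))
            y.append(1 if walking else -1)
    return X, y

def fit_scaler(X):
    """Per-feature mean and standard deviation of the training set."""
    cols = list(zip(*X))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) for c, m in zip(cols, means)]
    return means, stds

def scale(X, means, stds):
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]

def train_linear_svm(X, y, lam=0.01, lr=0.1, steps=200):
    """Batch sub-gradient descent on the L2-regularized hinge loss."""
    w, b = [0.0] * len(X[0]), 0.0
    n = len(X)
    for _ in range(steps):
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Only samples inside the margin contribute to the hinge sub-gradient.
            if yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b) < 1:
                grad_w = [g - yi * xj / n for g, xj in zip(grad_w, xi)]
                grad_b -= yi / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

X_train, y_train = make_dataset(20, seed=1)
means, stds = fit_scaler(X_train)
w, b = train_linear_svm(scale(X_train, means, stds), y_train)

X_test, y_test = make_dataset(10, seed=2)
preds = [predict(w, b, x) for x in scale(X_test, means, stds)]
accuracy = sum(p == t for p, t in zip(preds, y_test)) / len(y_test)
print(f"held-out accuracy: {accuracy:.2f}")
```

Real activity data is far noisier than these two synthetic classes, so a practical system would likely need more features, more activity classes and a tuned kernel or regularization setting.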

Cost impacts of processing, storage and transmission

In practice, recognizing user activity hinges on accurate live classification of AI data. Therefore, the key to optimizing product cost is to balance transmission, storage and processing costs without compromising classification accuracy.

This is not as simple as it sounds. Storing and processing AI data in the cloud would leave users with a substantial data bill. A Wi-Fi, Bluetooth or 4G module would drive up device costs and require uninterrupted internet access, which is not always possible.

Relegating all AI processing to the main processor would consume significant CPU resources, reducing available processing power. Likewise, storing all AI data on the device would push up storage costs.
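A back-of-the-envelope calculation makes the trade-off concrete. The figures below are assumptions chosen for illustration, not measurements: a 3-axis accelerometer with 16-bit samples at 50 Hz, a feature vector of 19 32-bit floats sent once every 2 seconds (the count matches the reduced feature set cited later in this article), and a 1-byte activity label sent at the same interval.

```python
MINUTES_PER_DAY = 24 * 60

def bytes_per_day(payload_bytes, messages_per_minute):
    """Daily data volume for a fixed-size message sent at a fixed rate."""
    return payload_bytes * messages_per_minute * MINUTES_PER_DAY

# Assumed: 3 axes x 2 bytes per sample, 50 samples per second.
raw_stream = bytes_per_day(3 * 2, 50 * 60)

# Assumed: 19 features x 4 bytes, one vector every 2 seconds.
feature_stream = bytes_per_day(19 * 4, 30)

# Assumed: a single 1-byte class label every 2 seconds.
label_stream = bytes_per_day(1, 30)

for name, volume in [("raw samples", raw_stream),
                     ("feature vectors", feature_stream),
                     ("activity labels", label_stream)]:
    print(f"{name:>16}: {volume / 1e6:.2f} MB per day")
```

Under these assumptions, raw streaming costs about 25.9 MB per day, feature vectors about 3.3 MB, and labels about 0.04 MB, which is why the choice of where each processing stage runs dominates transmission and storage cost.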

Resolving the issues

To resolve these technology conflicts, we need to do four things to marry the capabilities of AI with MEMS sensors.

First, decouple feature processing from the execution of the classification engine, and move the classification engine to a more powerful external processor. This minimizes the size of the feature processor while eliminating the need for continuous live data transmission.

Next, reduce storage and processing demands by deploying only the features required for accurate activity recognition. In one example based on a dataset from the UC Irvine (UCI) Machine Learning Repository, an AI model trained on activity data with 561 features identified user activity with an accuracy of 91.84 percent. Using just the 19 most determinative features, the model still achieved an accuracy of 85.38 percent. Notably, pre-processing alone could not identify these determinative features; only sensor fusion provided the data reliability required for accurate classification.
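One simple way to rank features by how well they separate two activities is a Fisher-style score: the squared difference of the class means divided by the sum of the class variances. The sketch below is an assumption for illustration only. It is not the selection method used in the UCI example, and the tiny two-class dataset is hypothetical.

```python
def mean(values):
    return sum(values) / len(values)

def variance(values):
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)

def fisher_scores(class_a, class_b, eps=1e-12):
    """Score each feature column: between-class separation over within-class spread."""
    scores = []
    for col_a, col_b in zip(zip(*class_a), zip(*class_b)):
        separation = (mean(col_a) - mean(col_b)) ** 2
        spread = variance(col_a) + variance(col_b) + eps  # eps avoids division by zero
        scores.append(separation / spread)
    return scores

def select_top_features(class_a, class_b, k):
    """Indices of the k highest-scoring features, returned in ascending order."""
    scores = fisher_scores(class_a, class_b)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])

# Hypothetical feature vectors (rows) for two activities, three features each.
still = [[1.0, 5.0, 0.1], [1.2, 3.0, 0.2], [0.8, 4.0, 0.1], [1.0, 6.0, 0.2]]
walking = [[3.0, 4.0, 0.3], [3.2, 6.0, 0.2], [2.8, 5.0, 0.3], [3.0, 3.0, 0.2]]

scores = fisher_scores(still, walking)
kept = select_top_features(still, walking, k=2)
print("scores:", [round(s, 2) for s in scores])
print("kept feature indices:", kept)
```

In this toy data the middle feature has identical class means, so it scores zero and is dropped. Only the kept columns would then need to be computed and stored on the device.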

Third, install low-power MEMS sensors that can incorporate data from multiple sensors (sensor fusion) and enable pre-processing for always-on execution. A low-power or application-specific MEMS sensor hub can slash the number of CPU cycles that the classification engine needs. The onboard software can then directly generate fused sensor outputs at various sensor data rates to support efficient feature processing.
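To give a sense of what sensor fusion involves, the sketch below shows a complementary filter, a common lightweight way to blend gyroscope and accelerometer readings into a single tilt estimate. It is an assumption for illustration and not a description of any particular sensor hub's firmware. The axis convention, the blend factor `alpha` and the 50 Hz update interval are all hypothetical choices.

```python
import math

def accel_pitch_deg(ax, az):
    """Tilt angle implied by gravity, assuming the device is not accelerating."""
    return math.degrees(math.atan2(ax, az))

def complementary_filter(pitch_deg, gyro_dps, ax, az, dt, alpha=0.98):
    """Blend the integrated gyroscope rate (smooth, but drifts over time)
    with the accelerometer angle (noisy, but drift-free)."""
    gyro_estimate = pitch_deg + gyro_dps * dt
    return alpha * gyro_estimate + (1 - alpha) * accel_pitch_deg(ax, az)

# Hypothetical scenario: a stationary device tilted by 30 degrees.
ax = math.sin(math.radians(30))
az = math.cos(math.radians(30))

pitch = 0.0  # deliberately wrong starting estimate
for _ in range(500):  # 10 seconds of updates at 50 Hz
    pitch = complementary_filter(pitch, gyro_dps=0.0, ax=ax, az=az, dt=0.02)

print(f"estimated pitch: {pitch:.2f} degrees")
```

Running a filter of this kind on the sensor hub means the classification engine receives one stable orientation value per update instead of separate raw streams from each sensor.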

Finally, retrain the model with system-supported data that can accurately identify the user’s activities.

Additionally, cutting the data capture rate can reduce the computational and transmission resource requirements to a bare minimum. Typically, a 50 Hz sample rate is adequate for everyday human activities. This may rise, however, to 200 Hz for fast-moving sports. Selecting the data rate dynamically and reducing processing in this way lowers manufacturing costs while making the product lighter and/or more powerful for the consumer.
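A minimal sketch of rate selection, using the 50 Hz and 200 Hz figures above, might look like the following. The activity-class names, the lookup table and the block-averaging decimator are assumptions for illustration. A real design would more likely configure the sensor's output data rate directly, or use a proper low-pass filter before downsampling.

```python
# Sample rates from the text; the class names are hypothetical labels.
RATE_HZ = {"everyday": 50, "fast_sport": 200}

def select_rate_hz(activity_class):
    """Pick a capture rate for the activity class, defaulting to the lower rate."""
    return RATE_HZ.get(activity_class, 50)

def decimate(samples, factor):
    """Reduce the sample rate by averaging consecutive blocks of `factor` samples.
    A trailing partial block is dropped."""
    usable = len(samples) - len(samples) % factor
    return [sum(samples[i:i + factor]) / factor for i in range(0, usable, factor)]

# Convert a short stretch of 200 Hz data to 50 Hz.
high_rate = [1, 2, 3, 4, 5, 6, 7, 8, 9]
factor = select_rate_hz("fast_sport") // select_rate_hz("everyday")
low_rate = decimate(high_rate, factor)
print(factor, low_rate)
```

Dropping from 200 Hz to 50 Hz cuts the number of samples to process, store or transmit by a factor of four.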

High efficiency in processing AI data is key to fulfilling its potential, driving down costs and delivering the most value to consumers. MEMS sensors, in combination with sensor fusion and software partitioning, are critical to driving this efficiency. Operating at very low power, MEMS sensors simplify application development while accurately analyzing motion sensor data.

Combining AI and MEMS sensors into a symbiotic system promises a new world of undreamt-of opportunities for designers and end users.

Based in Reutlingen, Germany, Kaustubh Gandhi is responsible for the product management of Bosch Sensortec’s software. For more information, visit: https://www.bosch-sensortec.com/

This blog post is based on an original article that first ran in EDN. It appears here with the permission of the publisher.