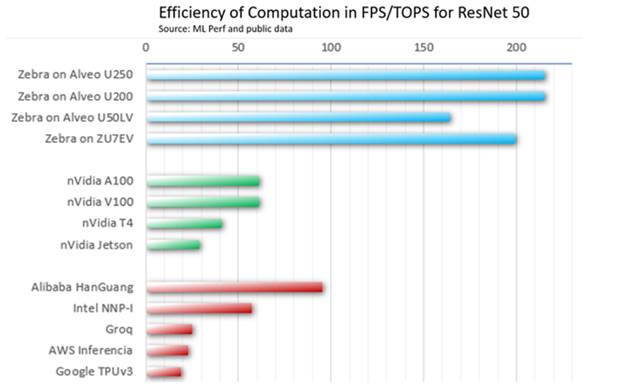

Machine learning software innovator Mipsology today announced that its Zebra AI inference accelerator achieved the highest efficiency based on the latest MLPerf inference benchmarking. Zebra on a Xilinx Alveo U250 accelerator card achieved more than 2x higher peak performance efficiency compared to all other commercial accelerators.

“We are very proud that our architecture proved to be the most efficient for computing neural networks out of all the existing solutions tested, and in MLPerf’s ‘closed’ category, which has the highest requirements,” said Ludovic Larzul, CEO and founder, Mipsology. “We beat behemoths like NVIDIA, Google, AWS, and Alibaba, and extremely well-funded startups like Groq, without having to design a specific chip and by tapping the power of FPGA reprogrammable logic. Perhaps the industry needs to stop over-relying on only increasing peak TOPS. What is the point of huge, expensive silicon with 400+ TOPS if nobody can use the majority of it?”

Peak TOPS has for years been the standard measure of potential compute performance, so many assume that more TOPS equals higher performance. However, this assumption ignores the real efficiency of the architecture and the fact that, at some point, returns diminish. This phenomenon, similar to “dark silicon” for power, occurs when circuitry simply cannot be used because of existing limitations. Zebra has proven to scale along with TOPS, maintaining the same high efficiency as peak TOPS grows.

With a peak of 38.3 TOPS, as announced by Xilinx, the Zebra-powered Alveo U250 accelerator card significantly outperformed competitors in throughput per TOPS and ranks among the best accelerators available today. Based on the MLPerf v0.7 inference results, it delivers performance similar to an NVIDIA T4 while having 3.5x fewer TOPS. In other words, given the same number of TOPS as a GPU, Zebra would deliver 3.5x more throughput, or 6.5x more than a TPU v3. This performance does not come at the cost of changing the neural network. Zebra was accepted in the demanding closed category of MLPerf, which requires no neural network changes, high accuracy, and no pruning or other methods requiring user intervention. Zebra achieves this efficiency while maintaining TensorFlow and PyTorch framework programmability.
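
The throughput-per-TOPS comparison above can be illustrated with a short calculation. This is only a sketch of the arithmetic, not Mipsology's or MLPerf's methodology: the function name is hypothetical, throughput is normalized to 1.0 for both devices (reflecting the "similar performance" claim, not a measured result), and the GPU's peak TOPS is derived from the 3.5x ratio stated above instead of taken from a vendor datasheet.

```python
def throughput_per_tops(throughput: float, peak_tops: float) -> float:
    """Efficiency metric: delivered throughput divided by peak TOPS."""
    if peak_tops <= 0:
        raise ValueError("peak_tops must be positive")
    return throughput / peak_tops


# Figures taken from the text above.
ZEBRA_U250_PEAK_TOPS = 38.3   # peak TOPS announced by Xilinx
GPU_TOPS_RATIO = 3.5          # the GPU has 3.5x the peak TOPS of the U250

# Assumption: the GPU's peak TOPS is derived from the stated ratio.
gpu_peak_tops = ZEBRA_U250_PEAK_TOPS * GPU_TOPS_RATIO

# Assumption: "similar performance" is modeled as equal normalized throughput.
NORMALIZED_THROUGHPUT = 1.0

zebra_efficiency = throughput_per_tops(NORMALIZED_THROUGHPUT, ZEBRA_U250_PEAK_TOPS)
gpu_efficiency = throughput_per_tops(NORMALIZED_THROUGHPUT, gpu_peak_tops)

# With equal throughput, the efficiency gain equals the ratio of peak TOPS.
efficiency_gain = zebra_efficiency / gpu_efficiency
print(f"Throughput-per-TOPS gain over the GPU: {efficiency_gain:.1f}x")
```

Under these assumptions, equal throughput at 3.5x fewer peak TOPS translates directly into a 3.5x advantage in throughput per TOPS, which is the relationship the comparison relies on.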

“Mipsology’s Zebra AI inference acceleration on the Xilinx Alveo platform gives developers a solid differentiator in neural network inference computation,” said Ramine Roane, vice president of AI & Software at Xilinx. “Zebra empowers our adaptive Alveo platforms with more compute efficiency than any other products, including GPUs.”

MLPerf has been the industry benchmark for comparing the training performance of ML hardware, software and services since 2018, and inference performance since 2019.