ROHIT BHAN, senior staff electrical engineer at Renesas Electronics America, and VATCHE SOUVALIAN, senior principal solutions engineer at Cadence Design Systems.

Why do semiconductor engineers use modeling when we can work with real circuits and schematics? At the most basic level, models help us predict a design’s behavior and performance and give us a way to check that designs meet specific requirements. Models allow design flaws or inconsistencies to be identified early in the development process, even before the actual design is done, which reduces the risk of “bugs” later on. Equally important, models facilitate communication among different teams (e.g., design, engineering, testing) by providing a common framework and visual representation of the design. That shared view helps teams evaluate potential risks associated with the design, make informed decisions, and implement mitigation strategies. Lastly, models can assess the performance of a design, including factors like speed, efficiency, and resource usage, helping to optimize the system before implementation.

While modeling is a powerful tool, modeling techniques can also present several challenges and issues. The most common issues include:

- Complexity: Creating accurate models for complex systems can be challenging. As systems grow in complexity, models can become difficult to develop, validate, and interpret.

- Assumptions and Simplifications: Models often rely on assumptions or simplifications that may not hold true in all situations, leading to inaccuracies in predictions and outcomes.

- Data Dependency: Effective modeling requires high-quality data. Incomplete, inaccurate, or biased data can significantly affect model performance and reliability.

- Computational Resources: Some models, especially those involving simulations of large systems, can require significant computational resources, making them time-consuming and expensive to run.

- Validation and Verification: Ensuring that a model accurately represents the real-world system can be difficult. Verification (checking that the model is implemented correctly) and validation (confirming that the model accurately reflects reality) are critical but challenging processes.

- Changing Conditions: Models may become outdated as conditions change (e.g., technological advancements, market shifts, or environmental changes), necessitating regular updates and recalibrations.

What is simulation?

Simulation refers to the use of computational models to mimic the behavior of a system or circuit. By simulating a design, engineers can check whether it functions as intended under various conditions, without needing to physically build the prototype. Simulation helps detect potential issues early in the design phase, reducing time, cost, and risk. Several types of simulations are used depending on the design and the stage of development.

Behavioral simulation focuses on verifying the high-level behavior of a design, ensuring that it produces the correct outputs for its inputs before diving into detailed implementation. Functional simulation checks that the design’s logic, such as the behavior of logic gates and their interconnections, meets the specifications. Timing simulation verifies that the system operates within timing constraints, ensuring that setup and hold times, as well as signal propagation delays, are met. Signal integrity simulation ensures the quality of signals in high-speed designs by checking for degradation, reflections, and crosstalk that could affect performance.
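As a rough illustration of what a timing check evaluates, the sketch below computes setup and hold slack for a single register-to-register path using the textbook slack equations. The function names and all delay values are hypothetical, chosen only for illustration; a real timing simulator or static timing tool works from extracted delays and library data rather than hand-entered numbers.

```python
def setup_slack(clock_period, clk_to_q, max_logic_delay, setup_time, skew=0.0):
    """Setup slack: time to spare before the capturing flop's setup window.

    skew is (capture clock arrival - launch clock arrival); positive skew
    gives the data more time to arrive.
    """
    return clock_period + skew - (clk_to_q + max_logic_delay + setup_time)


def hold_slack(clk_to_q, min_logic_delay, hold_time, skew=0.0):
    """Hold slack: margin by which new data arrives after the hold window.

    Positive skew delays the capture clock and so reduces the hold margin.
    """
    return clk_to_q + min_logic_delay - (hold_time + skew)


# Hypothetical path, all values in nanoseconds (assumed for illustration).
path = dict(clk_to_q=0.2, max_logic=1.5, min_logic=0.1, setup=0.1, hold=0.15)

s = setup_slack(2.0, path["clk_to_q"], path["max_logic"], path["setup"])
h = hold_slack(path["clk_to_q"], path["min_logic"], path["hold"])
print(f"setup slack = {s:.2f} ns, hold slack = {h:.2f} ns")
print("timing met" if s >= 0 and h >= 0 else "timing violated")
```

A negative slack on either check means the path violates its constraint at that clock period.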

A “Monte Carlo” simulation is a statistical approach used to assess the design’s robustness under various random variables, such as component tolerances and environmental factors. Power and thermal simulations validate that the design adheres to power consumption limits and thermal dissipation requirements, preventing overheating and ensuring efficiency. Finally, post-layout simulation is performed after the physical layout of the design is completed, verifying that parasitic effects like capacitance and resistance introduced by the layout do not negatively impact the system’s behavior. Each simulation type is crucial at different stages of the design process to ensure the system works reliably and efficiently under real-world conditions.
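To make the Monte Carlo idea concrete, here is a minimal sketch that estimates how often a first-order RC low-pass filter stays within a cutoff-frequency window when its resistor and capacitor vary within their tolerances. The component values, the uniform tolerance distributions, and the ±10% pass window are all assumptions made for illustration; production flows typically draw from foundry-supplied statistical device models inside a circuit simulator.

```python
import math
import random


def rc_cutoff_hz(r_ohm, c_farad):
    """Cutoff frequency of a first-order RC low-pass filter: 1 / (2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * r_ohm * c_farad)


def monte_carlo_yield(n_trials, r_nom, c_nom, r_tol, c_tol, f_min, f_max, seed=0):
    """Estimate the fraction of randomly perturbed designs that meet the spec."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    passed = 0
    for _ in range(n_trials):
        # Assumption: tolerances are uniformly distributed and independent.
        r = r_nom * (1.0 + rng.uniform(-r_tol, r_tol))
        c = c_nom * (1.0 + rng.uniform(-c_tol, c_tol))
        if f_min <= rc_cutoff_hz(r, c) <= f_max:
            passed += 1
    return passed / n_trials


# Hypothetical design: 10 kOhm +/-5 % resistor, 1 nF +/-10 % capacitor.
nominal = rc_cutoff_hz(10e3, 1e-9)
yield_estimate = monte_carlo_yield(
    10_000, 10e3, 1e-9, 0.05, 0.10, 0.9 * nominal, 1.1 * nominal
)
print(f"nominal cutoff = {nominal / 1e3:.2f} kHz")
print(f"estimated yield = {yield_estimate:.1%}")
```

Increasing the number of trials tightens the yield estimate at the cost of run time, which is the same trade-off a circuit-level Monte Carlo run faces.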

Digital simulators are software tools used to model and analyze the behavior of digital circuits and systems before physical implementation. They are essential in the design and verification of digital systems, allowing engineers to test and validate designs under various conditions without the need for physical prototypes. Digital simulators vary in functionality, but they generally offer tools to simulate the logical behavior, timing, and performance of digital logic circuits. In the semiconductor industry, some of the most widely used simulation tools include:

- ModelSim: A popular simulator for verifying Register Transfer Level (RTL) designs in Verilog, VHDL, or SystemVerilog. It is commonly used for functional verification and supports both simulation and debugging features.

- VCS: A high-performance simulation tool by Synopsys, commonly used for verifying RTL designs. VCS provides a wide range of features for logic simulation, including debug tools and advanced verification techniques.

- Xilinx Vivado Simulator: Used for Field Programmable Gate Array (FPGA) designs, Vivado includes simulation tools for verifying the functionality and timing of FPGA-based designs.

- Cadence Xcelium: A suite of verification tools that support RTL simulation, functional verification, and formal verification.

Analog Mixed-Signal (AMS) simulators are a type of simulation tool that allows engineers to model, simulate, and analyze systems that include both analog and digital components. These simulators are crucial for designs like system-on-chip (SoC), radio frequency (RF) circuits, sensor interfaces, and power management systems, where both analog signals (e.g., continuous voltage or current) and digital signals (discrete logic levels) interact. AMS simulators ensure that the analog and digital parts of a design work together as intended, verifying both functional behavior and performance under real-world conditions. Some widely used AMS tools in the semiconductor industry are:

- SPICE (Simulation Program with Integrated Circuit Emphasis): SPICE simulators are widely used for analog circuit design and simulation.

- Cadence Virtuoso: A comprehensive environment for designing and simulating analog, mixed-signal, and RF circuits. It includes tools for schematic capture, simulation, and verification.

- Mentor Graphics Eldo: An analog and mixed-signal simulator used for verifying the performance of analog circuits.

- Keysight ADS (Advanced Design System): A tool primarily used for RF and high-frequency circuit design and simulation, including mixed-signal environments.

Simulation plays a crucial role in design verification by offering several key benefits. First, it is cost-effective, as it reduces the need for physical prototypes, which can be expensive, particularly in the early stages of design. Time-saving is another significant advantage, as simulations help identify issues early, preventing costly late-stage redesigns and rework. Risk reduction is also achieved through simulation, as it allows for testing designs under extreme or rare conditions—such as temperature fluctuations or power variations—that may be difficult or hazardous to replicate in real-world prototypes.

With designs becoming increasingly complex, especially in large-scale integration or mixed-signal systems, simulation is often the only feasible method for thoroughly verifying all aspects of a design. As designs evolve, co-simulation, which involves combining different simulators or models (such as hardware and software simulations), is becoming more common, especially for hardware/software integration. Finally, automated verification is a growing trend, with verification processes being incorporated into continuous integration pipelines, enabling automated regression testing and bug detection to ensure that designs are consistently validated throughout development.

What is emulation?

Emulation is a crucial tool in semiconductor development, enabling designers to simulate and test semiconductor devices, circuits, and systems before physically fabricating them. This approach is essential because producing actual prototypes is time-consuming and expensive. Emulation helps uncover design flaws, bugs, and unintended behaviors early in the process, reducing the risk of costly rework after fabrication. By simulating the hardware, engineers can debug and refine the design before it goes into production.

In the case of systems-on-chip (SoCs), emulation is particularly valuable for analyzing performance under different conditions, such as data throughput, power usage, and heat dissipation. This allows designers to optimize the chip’s efficiency and functionality before physical testing. Furthermore, emulation helps software developers start writing and testing software or firmware even before the hardware is available, which is vital in embedded systems where hardware and software must work together seamlessly.

Emulation also enables the simulation of potential faults, like power glitches or signal degradation, so engineers can assess how the system handles these issues, ensuring reliability and robustness. By using emulation, designers can perform extensive testing, optimize performance, and detect problems early in the design cycle, all while minimizing costs and risks associated with physical prototyping. This results in faster time to market and better-performing semiconductor products.

In the semiconductor industry, we generally refer to emulation in the following contexts:

- Software Emulation involves running software applications designed for one platform on a different platform. For example, video game emulators allow users to play games from older consoles on modern computers or devices.

- Hardware Emulation refers to creating hardware that mimics the functions of another device. For instance, FPGA devices and Palladium from Cadence can be programmed to emulate the behavior of specific chips or systems. Hardware emulation tools use dedicated hardware to synthesize and emulate the device under test, targeting runs that can take many days to complete in simulation.

- Testing and Development Emulation is widely used in software development and testing. Developers can emulate different environments or devices to test applications without needing the actual hardware.

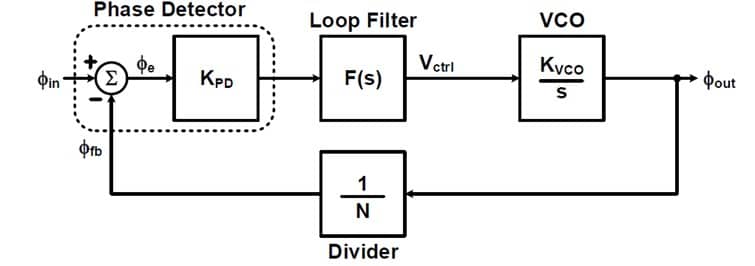

In complex dynamic systems like a Phase Locked Loop (PLL), many blocks are described using transfer functions, which require the use of difference equations to model each block accurately. This means each block requires a proper sampling frequency to achieve high accuracy and performance. Nominally, the sampling frequency is a function of each individual block’s bandwidth and the overall system bandwidth.
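As a small illustration of turning a transfer function into a difference equation, consider a first-order low-pass block H(s) = 1 / (1 + s/ω_c). Discretizing it with the backward Euler method at sampling period T gives y[n] = y[n-1] + α(x[n] - y[n-1]), with α = ω_c·T / (1 + ω_c·T). The sketch below applies that recurrence to a unit step and compares the result against the ideal continuous-time response at several sampling rates. The choice of block, the backward Euler method, and the 1 MHz bandwidth are assumptions made for illustration and are not taken from any specific PLL model.

```python
import math


def lowpass_step_response(bandwidth_hz, sample_rate_hz, duration_s):
    """Unit-step response of a backward-Euler first-order low-pass filter."""
    omega_t = 2.0 * math.pi * bandwidth_hz / sample_rate_hz  # omega_c * T
    alpha = omega_t / (1.0 + omega_t)
    n_steps = int(round(duration_s * sample_rate_hz))
    y = 0.0
    samples = []
    for _ in range(n_steps):
        y += alpha * (1.0 - y)  # difference equation with x[n] = 1
        samples.append(y)
    return samples


def max_error_vs_ideal(bandwidth_hz, sample_rate_hz, duration_s):
    """Largest gap between the sampled model and 1 - exp(-omega_c * t)."""
    omega_c = 2.0 * math.pi * bandwidth_hz
    samples = lowpass_step_response(bandwidth_hz, sample_rate_hz, duration_s)
    worst = 0.0
    for n, y in enumerate(samples, start=1):
        ideal = 1.0 - math.exp(-omega_c * n / sample_rate_hz)
        worst = max(worst, abs(y - ideal))
    return worst


bandwidth = 1e6  # hypothetical 1 MHz block bandwidth
errors = {
    ratio: max_error_vs_ideal(bandwidth, ratio * bandwidth, 5e-6)
    for ratio in (10, 100, 1000)
}
for ratio, err in errors.items():
    print(f"sample rate = {ratio:>4d} x bandwidth -> max error = {err:.4f}")
```

The error shrinks as the sampling rate rises relative to the block bandwidth, which is why the sampling frequency has to be chosen per block.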

A typical sampling frequency of a block is only a few orders of magnitude higher than its bandwidth. In linear time-invariant systems this is not an issue. In complex systems like a PLL, however, it is not as straightforward. As the PLL starts locking to its reference clock, the phase error duration becomes very short. Even for narrow current pulses, the accumulated charge error can make the modeled jitter much higher than that of the actual system. As illustrated in FIGURE 1, the duration of the phase error depends on the difference in time between the reference clock and the PLL feedback clock. The output generated to the loop filter is a current driven by the duration of the UP and DN signals.
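The effect of sampling on a narrow charge pump pulse can be sketched numerically. The example below assumes a simple zero-order-hold model in which the pulse is only observed at sample instants and each observed sample contributes one full sample period of current. The charge pump current, pulse width, and pulse start time are hypothetical values chosen for illustration.

```python
import math


def ideal_pulse_charge(i_cp, width):
    """Charge actually delivered by a rectangular current pulse: Q = I * t."""
    return i_cp * width


def sampled_pulse_charge(i_cp, t_start, width, sample_rate_hz):
    """Charge seen by a model that only observes the pulse at sample instants.

    Counts the sample instants n / fs that fall inside [t_start, t_start + width)
    and credits one full sample period of current for each of them.
    """
    first = math.ceil(t_start * sample_rate_hz)
    last = math.ceil((t_start + width) * sample_rate_hz)
    return i_cp * (last - first) / sample_rate_hz


i_cp = 100e-6        # hypothetical 100 uA charge pump current
width = 52e-12       # hypothetical 52 ps phase-error pulse near lock
t_start = 0.9734e-9  # hypothetical pulse start time

ideal = ideal_pulse_charge(i_cp, width)
ratios = {}
for fs in (1e9, 1e11, 1e12):
    ratios[fs] = sampled_pulse_charge(i_cp, t_start, width, fs) / ideal
    print(f"fs = {fs:.0e} Hz -> modeled charge / actual charge = {ratios[fs]:.2f}")
```

When the sample period is much longer than the pulse, the model either misses the pulse entirely or credits far more charge than the pulse delivered, and that error is what the loop filter then integrates.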

To alleviate this, we need to sample the charge pump output current at a much higher rate than the loop filter itself requires, so that the loop filter can generate a proper control signal for the Voltage Controlled Oscillator (VCO). This slows down both simulation and emulation. The loop filter on its own does not need this: to get a reasonable steady-state response, it is sufficient to run the loop filter clock only a few orders of magnitude higher than its bandwidth. For very high frequency applications, the jitter requirement is typically very tight, and running even portions of the PLL to meet such requirements can become computationally intensive.
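A back-of-the-envelope calculation shows why the sampling rate dominates run time. The numbers below (1 MHz loop bandwidth, a loop filter clock 100 times higher, 1 ps resolution for the charge pump, and a 100 µs lock transient) are assumptions chosen only to illustrate the scale of the difference.

```python
def steps_required(sim_time_s, sample_rate_hz):
    """Number of model evaluations needed to cover sim_time_s at a given rate."""
    return round(sim_time_s * sample_rate_hz)


loop_bandwidth_hz = 1e6                     # hypothetical loop bandwidth
lock_time_s = 100e-6                        # hypothetical lock transient
filter_rate_hz = 100 * loop_bandwidth_hz    # loop filter clock, 100 x bandwidth
charge_pump_rate_hz = 1e12                  # 1 ps resolution for narrow pulses

filter_steps = steps_required(lock_time_s, filter_rate_hz)
charge_pump_steps = steps_required(lock_time_s, charge_pump_rate_hz)

print(f"loop filter steps : {filter_steps:,}")
print(f"charge pump steps : {charge_pump_steps:,}")
print(f"ratio             : {charge_pump_steps // filter_steps:,}x")
```

Under these assumptions, running the whole loop at the charge pump rate costs four orders of magnitude more evaluations than the loop filter alone would need.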