A team of researchers, including engineers from the University of Massachusetts Amherst, has demonstrated that its analog computing device, called a memristor, can complete complex scientific computing tasks while bypassing the limitations of digital computing.

Many of today’s important scientific questions—from nanoscale material modeling to large-scale climate science—can be explored using complex equations. However, today’s digital computing systems are reaching their limit for performing these computations in terms of speed, energy consumption and infrastructure.

Qiangfei Xia, UMass Amherst professor of electrical and computer engineering and one of the corresponding authors of the research published in Science, explains that with current computing methods, every time you store information or give a computer a task, data must move between memory and computing units. As more complex tasks move larger amounts of data, you essentially get a processing “traffic jam.”

One way traditional computing has aimed to solve this is by increasing bandwidth. Instead, Xia and his colleagues at UMass Amherst, the University of Southern California, and computing technology maker TetraMem Inc. have implemented in-memory computing with analog memristor technology as an alternative that can avoid these bottlenecks by reducing the number of data transfers.

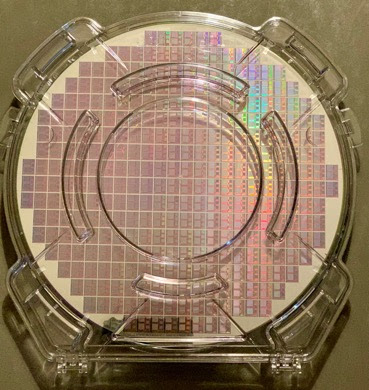

The team’s in-memory computing relies on an electrical component called a memristor, a combination of memory and resistor (a component that controls the flow of electricity in a circuit). A memristor controls the flow of electrical current in a circuit while also “remembering” its prior state, even when the power is turned off. This sets it apart from today’s transistor-based computer chips, which can only hold information while there is power. The memristor can be programmed into multiple resistance levels, increasing the information density of each cell.
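
As a rough illustration of why multiple resistance levels raise information density: a cell with L distinguishable levels can store log2(L) bits, so a 64-level cell holds as much as six binary cells. The minimal sketch below also snaps a target conductance onto the nearest of L evenly spaced levels. The helper names, the even spacing, the level counts and the units are assumptions for illustration, not the team's actual device parameters or programming scheme.

```python
import math


def bits_per_cell(levels: int) -> float:
    """Information capacity of one cell with `levels` distinguishable states."""
    return math.log2(levels)


def program_level(target: float, g_min: float, g_max: float, levels: int) -> float:
    """Snap a target conductance onto the nearest of `levels` evenly spaced states.

    Hypothetical helper: evenly spaced levels between g_min and g_max are an
    assumption; real devices use device-specific programming schemes.
    """
    if levels < 2:
        raise ValueError("need at least two levels")
    step = (g_max - g_min) / (levels - 1)
    clamped = min(max(target, g_min), g_max)  # stay inside the device's range
    index = round((clamped - g_min) / step)   # nearest programmable level
    return g_min + index * step


print(bits_per_cell(2))                         # 1.0 (binary cell)
print(bits_per_cell(64))                        # 6.0 (hypothetical 64-level cell)
print(program_level(37.2, 0.0, 100.0, 11))      # 40.0
```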

When organized into a crossbar array, such a memristive circuit performs analog computing by exploiting physical laws (Ohm’s law and Kirchhoff’s current law) in a massively parallel fashion, substantially accelerating matrix operations, the most frequently used and very power-hungry computations in neural networks. The computing is performed at the site of the devices, rather than by moving data between memory and processing units. Using the traffic analogy, Xia compares in-memory computing to the nearly empty roads seen at the height of the pandemic: “You eliminated traffic because [nearly] everybody worked from home,” he says. “We work simultaneously, but we only send the important data/results out.”
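
To make the crossbar idea concrete, here is an idealized sketch of what one read of a crossbar computes. Input voltages drive the rows; each device passes a current equal to its conductance times the row voltage (Ohm’s law), and each column wire sums the currents flowing into it (Kirchhoff’s current law), so the column outputs form a matrix-vector product. The function name is hypothetical, and the model ignores wire resistance, device noise and nonlinearity.

```python
from typing import List


def crossbar_mvm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Idealized crossbar read-out (hypothetical helper).

    conductances[i][j] is the device joining row i to column j. Column j
    outputs I[j] = sum_i G[i][j] * V[i]. The loops run sequentially here,
    whereas in hardware every device conducts at the same time.
    """
    if len(conductances) != len(voltages):
        raise ValueError("one input voltage per row is required")
    cols = len(conductances[0])
    currents = [0.0] * cols
    for i, row in enumerate(conductances):
        for j in range(cols):
            currents[j] += row[j] * voltages[i]  # Ohm's law, summed per column
    return currents


G = [[1.0, 2.0],
     [3.0, 4.0],
     [5.0, 6.0]]
V = [1.0, 0.5, 0.0]
print(crossbar_mvm(G, V))  # [2.5, 4.0]
```

The data (the conductances) never leaves the array; only the input voltages go in and the column currents come out, which is the “send only the results out” property Xia describes.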

Previously, these researchers demonstrated that their memristor can complete low-precision computing tasks, like machine learning. Other applications have included analog signal processing, radio-frequency sensing and hardware security.

“In this work, we propose and demonstrate a new circuit architecture and programming protocol that can efficiently represent high-precision numbers using a weighted sum of multiple, relatively low-precision analog devices, such as memristors, with a greatly reduced overhead in circuitry, energy and latency compared with existing quantization approaches,” says Xia.
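
The article does not detail the team’s circuit or programming protocol, so the following is only a generic sketch of the underlying idea: a high-precision value can be split across several low-precision devices and recovered as a weighted sum. It uses plain digit slicing, a swapped-in textbook technique rather than the paper’s method. Device k (most significant first) holds one of `levels` states and carries weight `levels**-(k + 1)`; the function names and the four-level devices are hypothetical.

```python
from typing import List


def slice_value(x: float, levels: int, num_devices: int) -> List[int]:
    """Encode x in [0, 1) as one level per device, most significant first.

    Together the devices resolve levels**num_devices steps, even though each
    device alone distinguishes only `levels` states (hypothetical helper).
    """
    if not 0.0 <= x < 1.0:
        raise ValueError("x must lie in [0, 1)")
    scale = levels ** num_devices
    n = min(round(x * scale), scale - 1)  # integer code; exact arithmetic below
    digits = []
    for _ in range(num_devices):
        n, d = divmod(n, levels)
        digits.append(d)
    return digits[::-1]


def reconstruct(digits: List[int], levels: int) -> float:
    """Weighted sum of the per-device levels."""
    return sum(d * levels ** -(k + 1) for k, d in enumerate(digits))


print(slice_value(0.7, 4, 3))       # [2, 3, 1]
print(reconstruct([2, 3, 1], 4))    # 0.703125
```

With three four-level devices the value is resolved to 1/64; each added device multiplies the resolution by four. Because the crossbar computation is linear, a matrix stored this way can be multiplied by reading each slice’s array separately and combining the column currents with the same weights.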

“The breakthrough for this particular paper is that we push the boundary further,” he adds. “This technology is not only good for low-precision, neural network computing, but it can also be good for high-precision, scientific computing.”

For the proof-of-principle demonstration, the memristor-based system solved static and time-evolving partial differential equations, Navier-Stokes equations and magnetohydrodynamics problems.
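
The article does not say which numerical methods were used. As a hedged illustration of how solving a PDE can reduce to the repeated matrix-vector products a crossbar accelerates, the sketch below solves the 1D Poisson equation -u'' = f with zero boundary values using the textbook Jacobi iteration, a swapped-in example rather than the team’s algorithm. Here `matvec` stands in for an idealized, noise-free crossbar read, and all names are hypothetical.

```python
from typing import List


def matvec(matrix: List[List[float]], vector: List[float]) -> List[float]:
    # Stand-in for one idealized analog crossbar read.
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def solve_poisson_1d(f_values: List[float], h: float,
                     tol: float = 1e-12, max_iter: int = 100_000) -> List[float]:
    """Jacobi iteration for (2u_i - u_{i-1} - u_{i+1}) / h^2 = f_i, u = 0 at both ends."""
    n = len(f_values)
    # Jacobi update u_i <- (u_{i-1} + u_{i+1} + h^2 f_i) / 2, written as u <- M u + c
    M = [[0.5 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]
    c = [0.5 * h * h * fi for fi in f_values]
    u = [0.0] * n
    for _ in range(max_iter):
        u_new = [mu + ci for mu, ci in zip(matvec(M, u), c)]  # one MVM per step
        if max(abs(a - b) for a, b in zip(u_new, u)) < tol:
            return u_new
        u = u_new
    raise RuntimeError("Jacobi iteration did not converge")


n = 9
h = 1.0 / (n + 1)
xs = [(i + 1) * h for i in range(n)]
u = solve_poisson_1d([2.0] * n, h)
# For f = 2 the exact solution is u = x(1 - x); the grid solution matches it
print(max(abs(ui - x * (1 - x)) for ui, x in zip(u, xs)))
```

Every iteration is dominated by the product `M u`, which is the step an analog crossbar would perform in place; the matrix stays fixed in the array while only the vector changes.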

“We pushed ourselves out of our own comfort zone,” he says, expanding beyond the low-precision requirements of edge-computing neural networks to high-precision scientific computing.

It took over a decade for the UMass Amherst team and collaborators to design a proper memristor device and build sizeable circuits and computer chips for analog in-memory computing. “Our research in the past decade has made analog memristor a viable technology. It is time to move such a great technology into the semiconductor industry to benefit the broad AI hardware community,” Xia says.