By PIERRICK BOULAY, Market and Technology Analyst, Yole Développement (Yole), Lyon-Villeurbanne, France

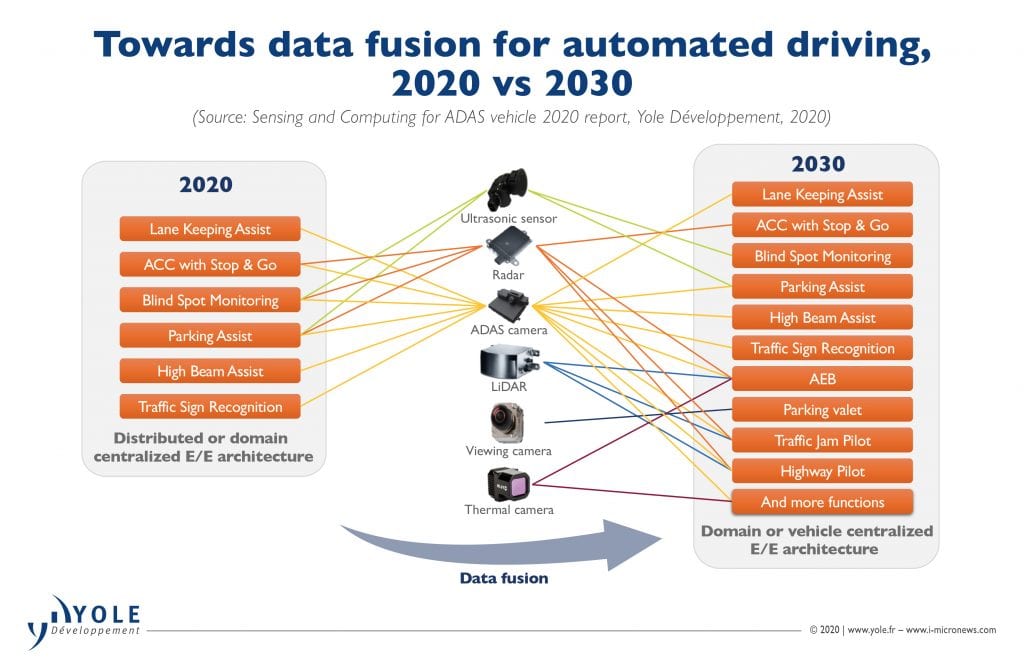

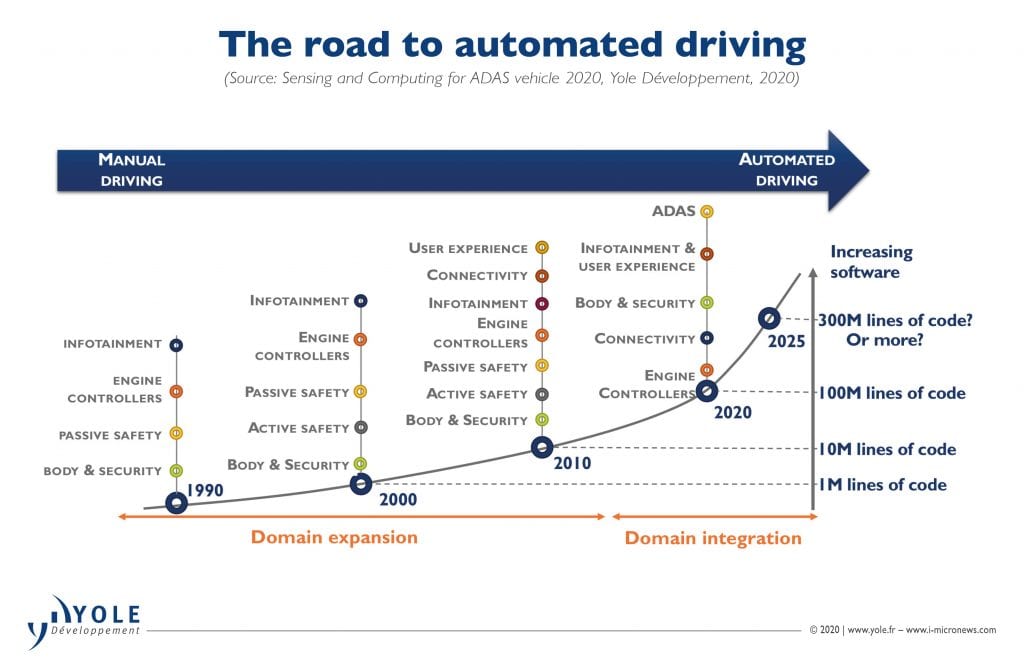

Advanced Driving Assistance Systems (ADAS) help the driver by combining sensors with electronic control units (ECUs). These systems have helped to reduce road fatalities, alert the driver to potential problems and avoid collisions.

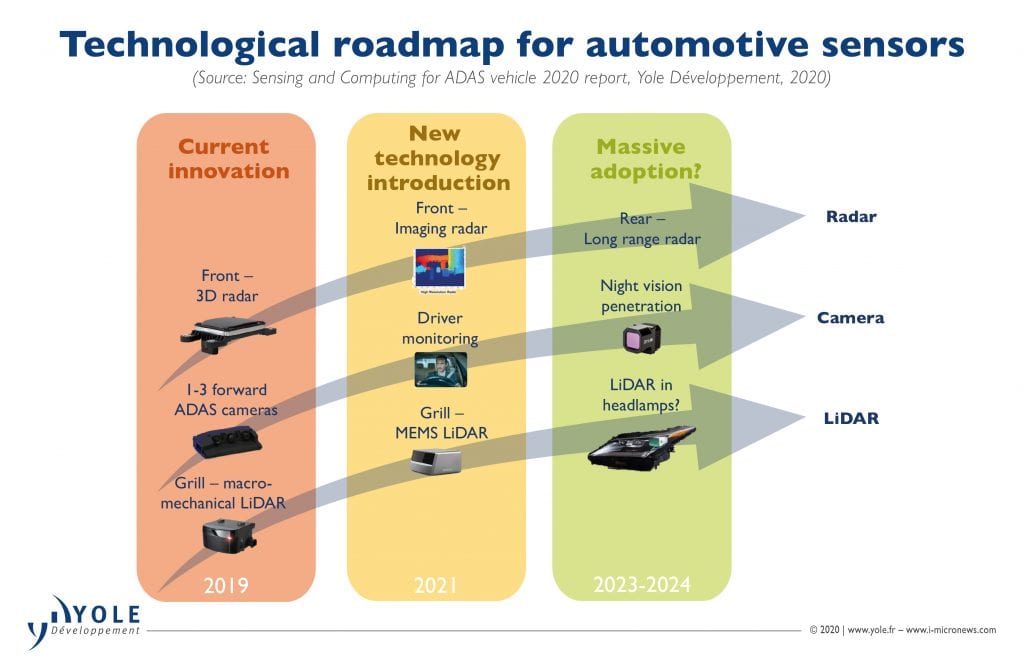

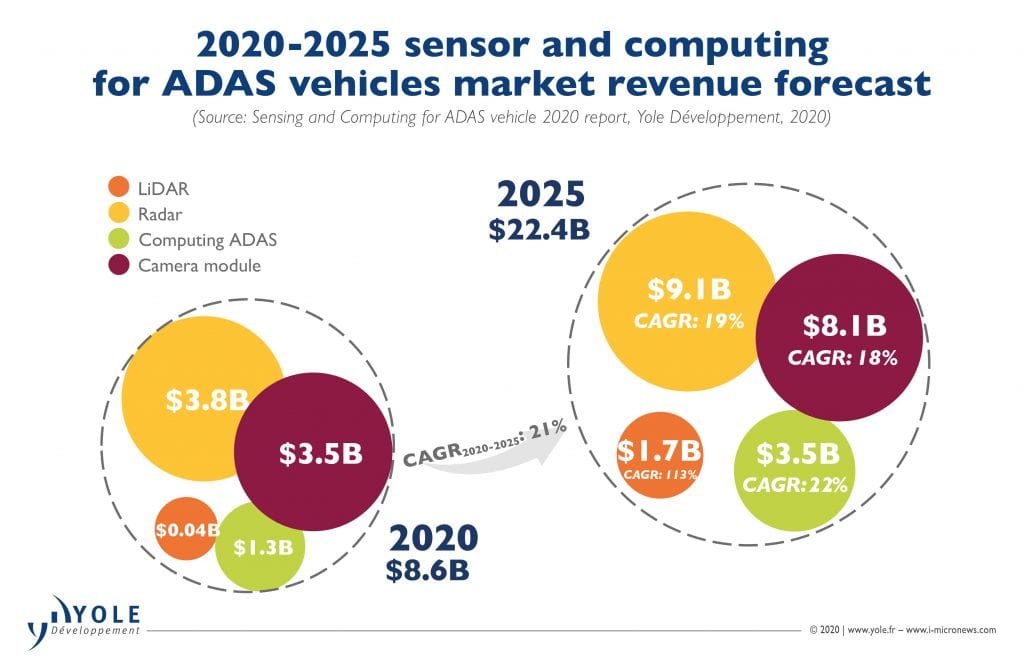

ADAS relies mostly on radars and cameras, together with the computing needed to process the data these sensors generate. Recently, more powerful computing chips have enabled more advanced functionalities. At the sensor level, some OEMs are introducing LiDAR in addition to radars and cameras (FIGURE 1).

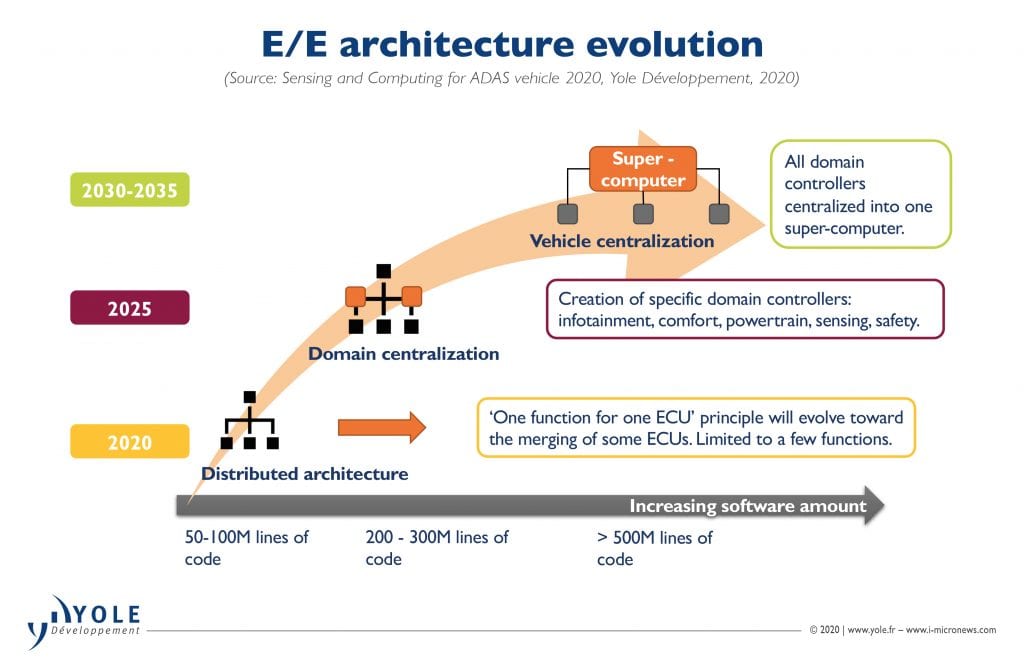

ADAS functionalities were initially developed for safety but are now also used to enable some automated driving features. The implementation of such features requires the use of more sensors, more computing power and a more complex electric/electronic (E/E) architecture.

Greater ADAS functionality will restart the industry after the coronavirus crisis

The auto industry has seen the impact of the coronavirus crisis evolve from a supply shock to a global demand shock. The production of new cars is expected to decline by 30% compared to the 2019 production level. The strategy of the automotive industry regarding the four major megatrends of connected, autonomous, shared, and electric driving is expected to remain unchanged going forward. However, the speed of adoption might change due to the crisis. Electrification will be the main focus for OEMs as restrictions and associated penalties for excessive CO2 emissions should remain in place.

The second priority for OEMs will be the development of ADAS for safety and automated driving features. Advanced emergency braking systems (AEB) are a great step toward avoiding forward collisions, but they still need perfecting, as shown by the American Automobile Association (AAA) in October 2019. OEMs will also develop automated driving features for traffic jams and highways, as consumers are looking for these to ease driving. Such features will be a way for OEMs to differentiate themselves.

To do so, more sensors, more computing power and a new E/E architecture will be required (FIGURE 2). Traditionally, cars were built using a distributed architecture based on a "one ECU, one function" principle. To enable automated driving features, OEMs will have to develop smarter ECUs or domain controllers that process the data generated by multiple sensors simultaneously (FIGURE 3).
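The contrast between the two architectures can be sketched in a few lines of Python. This is a toy illustration only, not any OEM's design: the `Detection` type, the function names, the sample values and the use of simple averaging as a stand-in for sensor fusion are all assumptions made for the example. It also assumes detections have already been matched to object IDs, which is a hard problem in its own right.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Detection:
    sensor: str        # e.g. "radar", "camera", "lidar"
    object_id: int     # hypothetical ID of the object the sensor reports
    distance_m: float  # estimated distance to the object, in metres


def distributed_ecu(detections: List[Detection], sensor: str) -> List[Detection]:
    """One ECU, one function: this ECU only sees data from its own sensor."""
    return [d for d in detections if d.sensor == sensor]


def domain_controller(detections: List[Detection]) -> Dict[int, float]:
    """Combine all sensors: average the distance estimates for each object.

    Plain averaging is a placeholder for a real fusion algorithm.
    """
    by_object: Dict[int, List[float]] = {}
    for d in detections:
        by_object.setdefault(d.object_id, []).append(d.distance_m)
    return {obj: sum(vals) / len(vals) for obj, vals in by_object.items()}


# Illustrative sample frame (made-up values).
frame = [
    Detection("radar", 1, 42.0),
    Detection("camera", 1, 40.0),
    Detection("camera", 2, 15.0),
]

radar_only = distributed_ecu(frame, "radar")  # sees object 1 only
fused = domain_controller(frame)              # sees objects 1 and 2
```

In this sketch the radar ECU never learns about object 2, while the domain controller sees both objects and can reconcile the two distance estimates for object 1.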

Audi and Tesla initiated this trend using a combination of radars and cameras, plus a LiDAR in Audi’s case. To fuse the data generated, Audi and Aptiv developed a domain controller, the zFAS, for front sensors. Tesla goes one step further in the development of domain controllers with its Autopilot hardware, which is much more complex, offers more functionality and can receive frequent over-the-air (OTA) software updates. Innovation brought by such features will be a key differentiator for OEMs looking to relaunch the market.

A sensor market worth $22.4B in 2025, led by radar

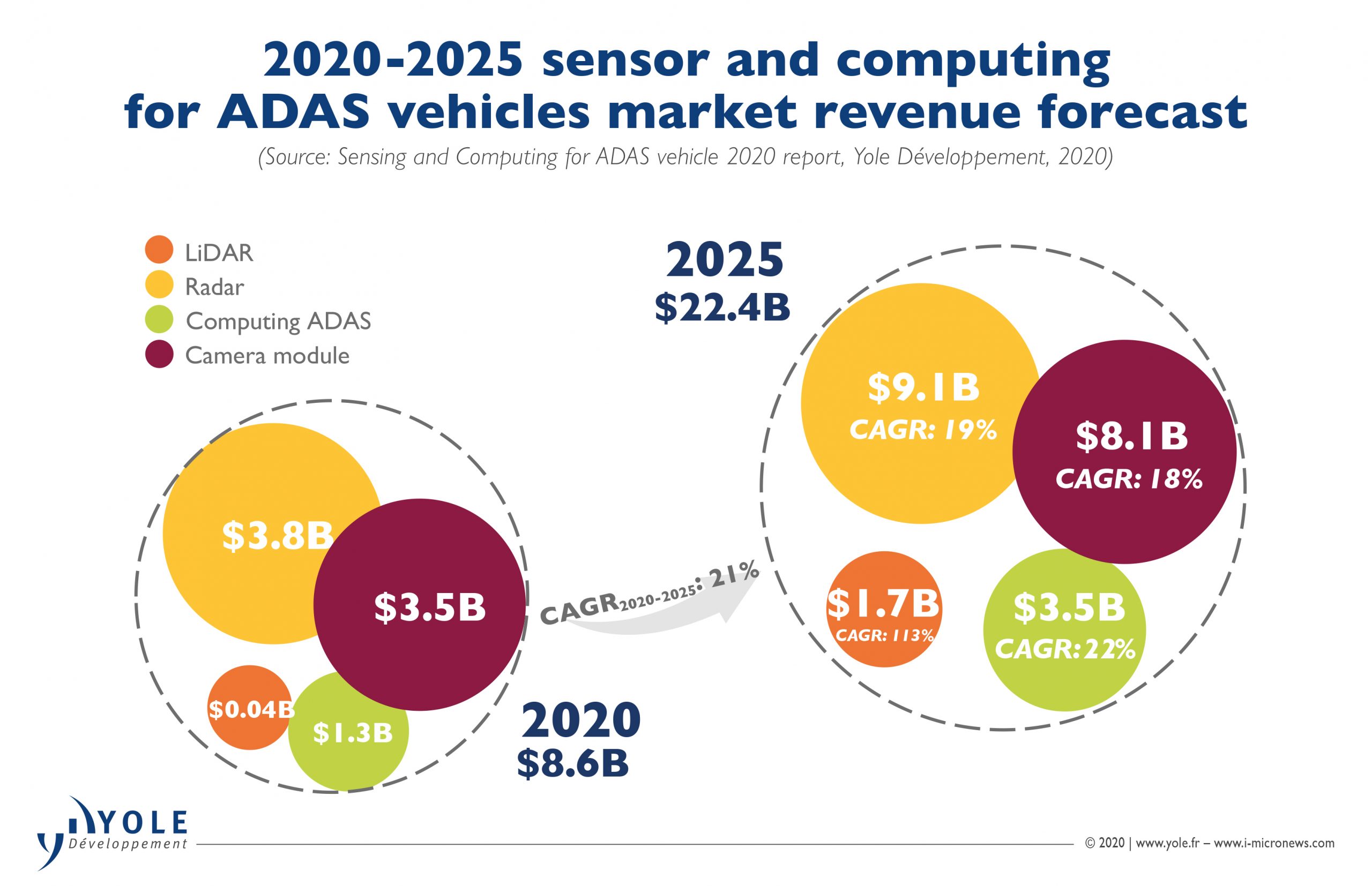

Vehicle production will be heavily impacted by the coronavirus crisis, and three years are expected to be needed to return to the pre-crisis level of output. In 2020, the global market for radar, cameras, LiDAR and ADAS computing is expected to reach $8.6B (FIGURE 4). Radar will generate almost half of this revenue, about 44%, with $3.8B, followed by cameras with $3.5B. ADAS computing will generate $1.3B, while LiDAR will remain marginal at $0.04B.
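As a quick sanity check on the 2020 breakdown, the short Python sketch below sums the four segment figures quoted above and derives each segment's share of the total. The dictionary keys are illustrative names chosen for this example; the values are the rounded figures from the text, so the shares are approximate.

```python
# 2020 revenue forecast in billions of US dollars, as quoted in the text.
revenue_2020 = {
    "radar": 3.8,
    "cameras": 3.5,
    "lidar": 0.04,
    "adas_computing": 1.3,
}

# Total market and each segment's fraction of it.
total_2020 = sum(revenue_2020.values())
shares_2020 = {name: value / total_2020 for name, value in revenue_2020.items()}

for name, share in shares_2020.items():
    print(f"{name}: {share:.1%}")
print(f"total: ${total_2020:.2f}B")
```

The total comes to $8.64B, in line with the $8.6B quoted, and radar's share works out to roughly 44%.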

With high penetration rates of radar and cameras in cars, the associated market revenues will recover rapidly from the coronavirus crisis. Radar market revenue is expected to surpass 2019’s revenue in 2021 and will reach $9.1B in 2025 at a Compound Annual Growth Rate (CAGR) of 19%. Camera market revenue will also surpass 2019’s revenue in 2021 and will reach $8.1B in 2025 at a CAGR of 18%. Market revenue from ADAS computing is expected to reach $3.5B in 2025 at a CAGR of 22%. LiDAR market revenue is quite limited today, as only one OEM offers this sensor as an option in some of its cars. Other OEMs, such as BMW and Volvo, are expected to follow in the coming years, but implementation will remain limited to high-end vehicles, so volumes are expected to stay low. In this context, LiDAR market revenue is expected to reach $1.7B in 2025 at a CAGR of 113%. LiDAR remains a complex sensor for OEMs and Tier-1s to integrate, while radars and cameras keep improving their performance.
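The growth rates above can be reproduced from the 2020 and 2025 revenue figures. The Python sketch below assumes the CAGR is measured over the five years from 2020 to 2025, which the text implies but does not state; the `cagr` helper and the dictionary keys are names introduced here for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between a start and an end value."""
    return (end / start) ** (1 / years) - 1


# (2020 revenue, 2025 revenue) in billions of US dollars, as quoted in the text.
forecast = {
    "radar": (3.8, 9.1),
    "cameras": (3.5, 8.1),
    "adas_computing": (1.3, 3.5),
    "lidar": (0.04, 1.7),
}

YEARS = 5  # assumption: growth measured from 2020 to 2025

growth = {name: cagr(start, end, YEARS) for name, (start, end) in forecast.items()}
total_2025 = sum(end for _, end in forecast.values())

for name, rate in growth.items():
    print(f"{name}: {rate:.0%}")
print(f"2025 total: ${total_2025:.1f}B")
```

With these rounded inputs, radar, cameras and ADAS computing come out at about 19%, 18% and 22%, and the 2025 segments sum to $22.4B. LiDAR comes out near 112% rather than 113%; the small gap is plausibly due to rounding of the $0.04B base figure.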

The ADAS sensor market is expected to continue to be led by radar and cameras for several reasons. Radar and cameras are already well integrated in vehicles, and this integration has no impact on a car's aesthetics. Adding such sensors should not be a difficult task for designers, unlike LiDARs, which are still bulky and difficult to integrate and need further development to reduce their volume. Radar technology is still improving, with the introduction of imaging radar expected for 2021, enabling better detection and classification of objects. The same is true of forward ADAS cameras and their associated vision processing. The latest chips enable the evolution from mono- to trifocal systems, allowing automated driving features on dedicated roads such as highways (FIGURE 5).

Better sensor performance to enable automated driving

Today, radar and cameras are the main sensors used by OEMs to develop safety and automated driving features. Consequently, the penetration rate of forward ADAS mono cameras will increase from 51% in 2020 to 85% in 2025. This type of camera is multi-purpose: in mainstream cars it is used for AEB as well as for other functionalities such as Lane Keeping Assist (LKA) and Traffic Sign Recognition (TSR). In the most advanced cars, forward ADAS triple cameras are used to develop advanced automated driving features such as Tesla’s.

Radar is keeping pace, and the technology is continuously improving. Starting in 2019, 3D radar with a better vertical field of view enabled detection of vehicle height. Radar performance will keep increasing with the implementation of imaging radar, expected to start in 2021. Imaging radar will generate a 4-dimensional point cloud to which artificial intelligence and deep learning can be applied in order to build a more detailed picture of the scene.

On the LiDAR side, technology is moving from macro-mechanical scanning to MEMS scanning and flash. Most LiDAR manufacturers are involved in these solid-state technologies. One of the issues for LiDAR is its integration into the vehicle. Today it is integrated in the grille, but that may not be the ideal solution. Two other positions, in headlamps or behind the windshield, are targeted by Tier-1s and OEMs. More R&D will be necessary to reduce the volume of the sensor enough to allow integration in these positions. Another issue for LiDAR is the need to process the large quantity of data it generates. High computing power, over 25 tera-operations per second (TOPS), will be necessary to clearly distinguish and classify objects on the road such as pedestrians, cyclists, cars or any other potential danger. A final issue with LiDAR is its cost compared to the two other technologies: it is about 10 times costlier than an ADAS mono camera. Alongside size reduction, cost reduction will also be required for significant adoption by OEMs.

About the author

As part of the Photonics, Sensing & Display division at Yole Développement (Yole), Pierrick Boulay works as Market and Technology Analyst in the fields of Solid-State Lighting and Lighting Systems, carrying out technical, economic, and marketing analyses. Pierrick has authored several reports and custom analyses dedicated to topics such as general lighting, automotive lighting, LiDAR, IR LEDs, UV LEDs and VCSELs. Prior to Yole, Pierrick worked at several companies, where he developed his knowledge of general lighting and automotive lighting, mostly in R&D departments working on LED lighting applications. Pierrick holds a Master’s in Electronics (ESEO – Angers, France).