By Tom Doyle

Every day it seems like a new portable voice-first device is coming to market. From smart speakers small enough to fit in your pocket to tiny wireless earbuds and voice-activated TV remote controls, we are increasingly using voice to play music, select TV shows, turn on the lights or interact with our smart thermostats. While the popularity of voice-first interfaces has spawned massive diversity in device type, as long as these devices are portable, they have one thing in common: They’re battery-powered, and that could be a problem for consumers who are tired of frequently recharging or replacing batteries.

Change the Architecture, Reduce the Power

The issue lies in the traditional hardware architectures of today’s voice-first devices, which are notoriously inefficient when it comes to power consumption. Such devices rely on a “digitize-first” model of processing voice data, in which the heaviest power consumers, like the analog-to-digital converter (ADC) and the digital signal processor (DSP), do all the heavy lifting up front, right at the start of the audio signal chain. They continuously digitize and analyze 100% of the ambient sound data as they search for a wake word, even if speech is not present and the only sound is noise. Because speech occurs randomly and sporadically, that continuous digitization of sound wastes up to 90% of battery power.

To tackle the battery drain in portable voice-first devices, we need look no further than the human brain. Our brain processes sound very efficiently. Imagine that you are outside your house having a conversation with your neighbor. You are able to focus on what your neighbor is saying because your brain can differentiate between sounds that it should send to the deeper brain for speech processing and sounds that it shouldn’t bother processing further (e.g., dog barks, sirens or car traffic). The brain spends minimal energy up front to decide whether it should spend additional energy on processing down the line. In other words, it saves the most power-intensive processing only for the important sounds.

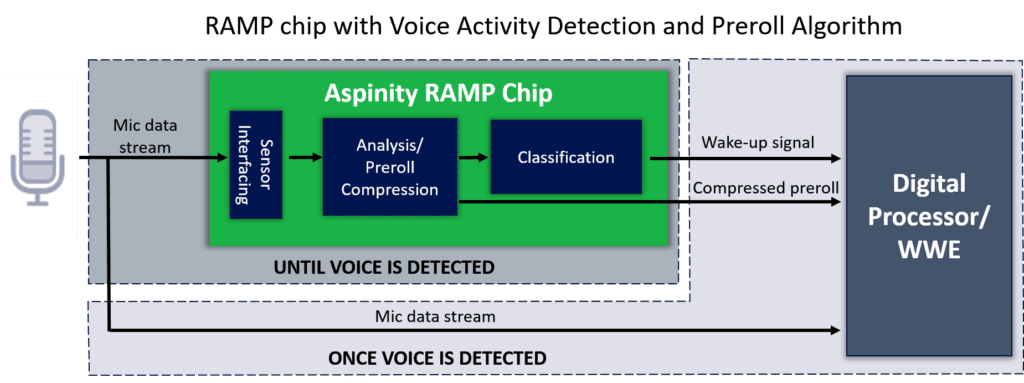

We can mimic the brain’s approach to signal processing by enabling a new “analyze-first” architecture for voice-first devices. This analyze-first approach requires ultra-low-power analog processing technology that can differentiate voice from noise before the sound data is digitized. This keeps the higher-power capabilities in a voice-first system, such as the wake-word engine, in a low-power mode when just noise is present. The higher-power chips in the system, e.g., the ADC and DSP, wake up only when speech is detected. Like our brain, a voice-first system built on an analyze-first architecture conserves energy most of the time, saving the heavy lifting, i.e., the wake-word listening, for times when speech is present.
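To make the control flow concrete, here is a minimal software sketch of the gating idea. It is only an illustration: in a real analyze-first device the voice/noise decision is made in analog hardware before any digitization, whereas this sketch stands in for that stage with a simple threshold on a made-up `energy` value. The names `Frame`, `analog_voice_detector`, `count_wakeups_analyze_first` and `count_wakeups_digitize_first`, as well as the threshold, are all assumptions for the example, not part of any product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    """One short slice of ambient sound (hypothetical representation)."""
    energy: float  # assumed feature produced by the always-on analog stage


def analog_voice_detector(frame: Frame, threshold: float = 0.5) -> bool:
    """Stand-in for the ultra-low-power analog stage: a cheap yes/no decision.

    A real detector would be far more selective than a single threshold;
    this is an assumption made to keep the sketch short.
    """
    return frame.energy > threshold


def count_wakeups_analyze_first(frames: List[Frame], threshold: float = 0.5) -> int:
    """Count the frames for which the ADC/DSP/wake-word stage would be woken."""
    wakeups = 0
    for frame in frames:
        if analog_voice_detector(frame, threshold):
            # Only at this point would the higher-power chips digitize the
            # sound and listen for the wake word.
            wakeups += 1
    return wakeups


def count_wakeups_digitize_first(frames: List[Frame]) -> int:
    """In a digitize-first design every frame is digitized and analyzed."""
    return len(frames)


# Nine frames of background noise and one frame of speech (made-up values).
frames = [Frame(energy=0.1) for _ in range(9)] + [Frame(energy=0.9)]
analyze_first_wakeups = count_wakeups_analyze_first(frames)
digitize_first_wakeups = count_wakeups_digitize_first(frames)
```

With these made-up frames, the higher-power stage runs for a single frame in the analyze-first case and for all ten in the digitize-first case. The sketch says nothing about detector accuracy, which in practice determines how often the system wakes on noise or misses speech.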

This architectural shift to analyze-first is well worth the investment because it reduces the system’s power consumption in a battery-powered voice-first device by up to 10x. That’s the difference between a portable smart speaker that runs for a month on battery instead of a week, or smart earbuds that last for a whole day instead of a few hours on a single charge. Longer battery life in portable voice-first devices generates more goodwill among consumers, creating another key differentiator for manufacturers engaged in the ultra-competitive race for more users.
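A rough back-of-the-envelope model shows where a saving of this order can come from. The sketch below assumes a simple duty-cycle model: the analog detector is always on, and the higher-power ADC/DSP stage runs only for the fraction of time that speech is present. Every number in it (1,000 µW for the active stage, 10 µW for the detector, speech present 10% of the time, a 100 mWh battery) is a hypothetical placeholder chosen for illustration, not a measurement from any device, and the model covers only the always-listening audio chain.

```python
def average_power_uw(active_uw: float, detector_uw: float, speech_fraction: float) -> float:
    """Average power of an analyze-first chain under a simple duty-cycle model.

    The detector runs all the time; the active (ADC/DSP) stage runs only
    while speech is present.
    """
    return detector_uw + speech_fraction * active_uw


def battery_life_hours(capacity_mwh: float, avg_power_uw: float) -> float:
    """Battery life in hours: capacity (converted from mWh to uWh) / power (uW)."""
    return capacity_mwh * 1000.0 / avg_power_uw


# Hypothetical placeholder values, not measurements.
ACTIVE_UW = 1000.0      # ADC + DSP + wake-word engine while running
DETECTOR_UW = 10.0      # always-on analog voice detector
SPEECH_FRACTION = 0.10  # share of time that speech is actually present
CAPACITY_MWH = 100.0    # battery budget for the listening chain

digitize_first_uw = ACTIVE_UW  # the active stage never sleeps
analyze_first_uw = average_power_uw(ACTIVE_UW, DETECTOR_UW, SPEECH_FRACTION)

improvement = digitize_first_uw / analyze_first_uw
digitize_first_hours = battery_life_hours(CAPACITY_MWH, digitize_first_uw)
analyze_first_hours = battery_life_hours(CAPACITY_MWH, analyze_first_uw)
```

Under these assumptions the analyze-first chain averages 110 µW against 1,000 µW, an improvement of roughly 9x. The ratio approaches 10x as the detector's own power shrinks relative to the active stage, and it grows further if speech is present less often.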

For more information on the analyze-first architectural approach to voice-first devices, please view our video.

Tom Doyle is CEO and founder of Aspinity. He brings over 30 years of experience in operational excellence and executive leadership in analog and mixed-signal semiconductor technology to Aspinity. Prior to Aspinity, Tom was group director of Cadence Design Systems’ analog and mixed-signal IC business unit, where he managed the deployment of the company’s technology to the world’s foremost semiconductor companies. Previously, Tom was founder and president of the analog/mixed-signal software firm Paragon IC Solutions, where he was responsible for all operational facets of the company, including sales and marketing, global partners/distributors, and engineering teams in the US and Asia. Tom holds a B.S. in Electrical Engineering from West Virginia University and an MBA from California State University, Long Beach. For more information, visit www.aspinity.com.

Aspinity is a member of SEMI-MEMS & Sensors Industry Group, which connects the MEMS and sensors supply network, allowing members to address common industry challenges and explore new markets.